A Tutorial on Singular Value Decomposition

Preface

Under some circumstance, we want to compress data to save storage space. For example, when iPhone7 was released, many were trapped in a dilemma: Should I buy a 32G iPhone without enough free space or that of 128G with a lot of storage being wasted? I had been trapped in such dilemma indeed. I still remember that I only had 8G storage totally when I was using my first Android phone. What annoyed me most was my thousands of photos. Well, I confess that I was being always a mad picture taker. I knew that there were some technique which could compress a picture through reducing pixel. However, it is not enough, because, as you know, in some arbitrary position in a picture, we can tell that the picture share the same color. An extreme Example: if we have a pure color picture, what we just need know is the RGB value and the size, then reproducing the picture is done without extra effort. What I was dreaming is done perfectly by Singular Value Decomposition(SVD).

Introduction

Before SVD, in this article, I will introduce some mathmatical concepts in the first place which cover Linear transformation and EigenVector&EigenValue. This Background knowledge is meant to make SVD straightforward. You can skip if you are familar with this knowledge.

Linear transformation

Given a matrice \(A\) and vector \(\vec{x}\), we want to compute the mulplication of \(A\) and \(\vec{x}\)

\[\vec{x}=\begin{pmatrix}1\\3\end{pmatrix}\qquad A=\begin{pmatrix}2 & 1 \\ -1 & 1 \end{pmatrix}\qquad\vec{y}=A\vec{x}\]

But when we do this multiplication, what happens? Acutually, when we multiply \(A\) and \(\vec{x}\), we are changing the coordinate axes of the vector \(x\) to another new axes. Begin with a simpler example, we let

\[A=\begin{pmatrix}1 & 0\\ 0 &1\end{pmatrix}\]

then we have \[A\vec{x}=\begin{pmatrix}1 & 0\\ 0 &1\end{pmatrix}\begin{pmatrix}1\\3\end{pmatrix}=\begin{pmatrix}1\\3\end{pmatrix}\]

You may have noticed that we can always get the same \(\vec{x}\) after left multiply by A. In this case, we use coordinate axes \(i=\begin{pmatrix}1 \\ 0\end{pmatrix}\) and \(j=\begin{pmatrix}0 \\ 1\end{pmatrix}\) as the figure below demonstrated. That is, if we want to represent \(\begin{pmatrix}1\\3\end{pmatrix}\) under the coordination, we can calculate the transformation as followed:

\[\begin{align} A\vec{x}=1\cdot i + 3\cdot j = 1\cdot \begin{pmatrix}1 \\ 0\end{pmatrix} + 3\cdot \begin{pmatrix}0 \\ 1\end{pmatrix}=\begin{pmatrix}1\\3\end{pmatrix}\end{align}\]

As we know, we can put a vector to anywhere in space, and if we want to calculate sum of two vectors, the simplest way is to connect the to vector from one's head to the other's tail. Our example, we compute \(A\vec{x}\) means add two vector(green imaginary lines) up. And the answer is still \(\begin{pmatrix}1\\3\end{pmatrix}\).

Now we change \(i=\begin{pmatrix}2\\ -1\end{pmatrix}\) and \(j=\begin{pmatrix}1\\1\end{pmatrix}\) as the coordinate axes(the red vectors), which means \(A=\begin{pmatrix}2 & 1 \\ -1 & 1\end{pmatrix}\). I put vectors(black ones) to this figure as well. We can see what happens when we change a new coordinate axes.

First of all, we multiply \(j\) by \(3\) and \(i\) by 1. Then we move vector j and let the head of \(i\) connect the tail of \(3\cdot j\). We can now find what is the coordination of \(1\cdot i+3\cdot j\)(the blue one). We now verify the result using mutiplication of \(A\) and \(\vec{x}\):

\[A\vec{x}=\begin{pmatrix}2 & 1 \\ -1 & 1\end{pmatrix}\begin{pmatrix}1\\3\end{pmatrix}=1\cdot \begin{pmatrix}2 \\ -1\end{pmatrix} + 3\cdot \begin{pmatrix}1 \\ 1\end{pmatrix}=\begin{pmatrix}5\\2\end{pmatrix}\]

Here, you can imagine that matrice \(A\) is just like a function \(f(x)\rightarrow y\), when you subsitute

\(x\), we get the exact \(y\) using the principle \(f(x)\rightarrow y\). In fact, the

multiplication is tranform the vector from one coordination to

another.

Here, you can imagine that matrice \(A\) is just like a function \(f(x)\rightarrow y\), when you subsitute

\(x\), we get the exact \(y\) using the principle \(f(x)\rightarrow y\). In fact, the

multiplication is tranform the vector from one coordination to

another.

Exercise

- \(A=\begin{pmatrix}1 & 2 \\ 3 & 4\end{pmatrix}\), draw the picture to stretch and rotate \(x=\begin{pmatrix}1\\3\end{pmatrix}\).

- Find a \(A\) matrix to rotate \(\vec{x}=\begin{pmatrix}1\\3\end{pmatrix}\) to \(90^{\circ}\) and \(180^{\circ}\).

- what if \(A=\begin{pmatrix}1 & 2 \\ 2 & 4\end{pmatrix}\).

EigenVector and EigenValue

EigenVector and EigenValue is an extremely important concept in linear algebra, and is commonly used everywhere including SVD we are talking today. However, many do not know how to interpret it. In fact, EigenVector and EigenValue is very easy as long as we know about what is linear transformation.

A Problem

Before start, let's take a look at a question: if we want to multiply matrices for 1000 times, how to calculate effectively? \[AAA\cdots A= \begin{pmatrix}3& 1 \\0 & 2\end{pmatrix}\begin{pmatrix}3& 1 \\0 & 2\end{pmatrix}\begin{pmatrix}3& 1 \\0 & 2\end{pmatrix}\cdots \begin{pmatrix}3& 1 \\0 & 2\end{pmatrix}=\prod_{i=1}^{1000}\begin{pmatrix}3& 1 \\0 & 2\end{pmatrix}\]

Intuition

Last section, we have talked that if we multiply a vector by a matrix \(A\), means that we use \(A\) to stretch and rotate the vector in order to represent the vector in a new coordinate axes. However, there are some vectors for \(A\), they can only be stretched but can not be rotated. Assume \(A=\begin{pmatrix}3 & 1 \\ 0 & 2\end{pmatrix}\), let \(\vec{x}=\begin{pmatrix}1 \\ -1\end{pmatrix}\). When we multiply \(A\) and \(\vec{x}\)

\[A\vec{x}=\begin{pmatrix}3 & 1 \\ 0 & 2\end{pmatrix}\begin{pmatrix}1 \\ -1\end{pmatrix}=\begin{pmatrix}2 \\ -2\end{pmatrix}=2\cdot \begin{pmatrix}1 \\ -1\end{pmatrix}\]

It turns out we can choose any vector along \(\vec{x}\), the outcome is the same, for example:

\[A\vec{x}=\begin{pmatrix}3 & 1 \\ 0 & 2\end{pmatrix}\begin{pmatrix}-3 \\ 3\end{pmatrix}=\begin{pmatrix}-6 \\ -6\end{pmatrix}=2\cdot \begin{pmatrix}-3 \\ 3\end{pmatrix}\]

We name vectors like \(\begin{pmatrix}-3 \\ 3\end{pmatrix}\) and \(\begin{pmatrix}1 \\ -1\end{pmatrix}\) EigenVectors and 2 the conresponse EigenValues. In practice, we usually choose unit eigenvectors(length equals to 1) given that there are innumerable EigenVectors along the line.

I won't cover how to compute these vectors and vaules and just list the answer as followed

\[\begin{align}&\begin{pmatrix}3 & 1 \\0& 2\end{pmatrix} \begin{pmatrix}{-1}/{\sqrt(2)} \\ {1}/{\sqrt(2)}\end{pmatrix}= 2\begin{pmatrix}{-1}/{\sqrt(2)} \\ {1}/{\sqrt(2)}\end{pmatrix} \qquad\qquad\vec{x_1}=\begin{pmatrix}{-1}/{\sqrt(2)} \\ {1}/{\sqrt(2)}\end{pmatrix} &\lambda_1=2\\ &\begin{pmatrix}3 & 1 \\0& 2\end{pmatrix} \begin{pmatrix}1 \\ 0\end{pmatrix}\qquad=\qquad 3\begin{pmatrix}1 \\ 0\end{pmatrix}\qquad\qquad\quad\,\,\,\vec{x_2}=\begin{pmatrix}1 \\ 0\end{pmatrix} &\lambda_2=3 \end{align}\] Notice that \(|\vec{x_1}|=1\) and \(|\vec{x_2}|=1\) #### EigenValue Decomposition If we put two EigenVectors and corresponding EigenValues together, we can get the following equation: \[AQ=\begin{pmatrix}3 & 1 \\0& 2\end{pmatrix} \begin{pmatrix} {-1}/{\sqrt(2)}&1\\ {1}/{\sqrt(2)}&0 \end{pmatrix}= \begin{pmatrix} {-1}/{\sqrt(2)}&1 \\ {1}/{\sqrt(2)}&0 \end{pmatrix} \begin{pmatrix} 2 & 0\\ 0 & 3 \end{pmatrix}=Q\Lambda \] Then we have \(AQ=Q\Lambda\), the conclusion is still right if we introduce more dimensions, that is \[\begin{align} A\vec{x_1}=\lambda\vec{x_1}\\ A\vec{x_2}=\lambda\vec{x_2}\\ \vdots\qquad\\ A\vec{x_k}=\lambda\vec{x_k} \end{align}\]

\[Q= \begin{pmatrix} x_{11}& x_{21} &\cdots x_{k1}&\\ x_{12}& x_{22} &\cdots x_{k2}&\\ &\vdots&&\\ x_{1m}& x_{22} &\cdots x_{km}& \end{pmatrix} \qquad\Lambda= \begin{pmatrix} \lambda_1 & 0 & \cdots&0\\ 0 &\lambda_2&\cdots&0\\ \vdots&\vdots&\ddots\\ 0&\cdots&\cdots&\lambda_k \end{pmatrix}\]

If we do something on the equation \(AQ=Q\Lambda\), then we have: \[AQQ^{-1}=A=Q\Lambda Q^{-1}\] It is EigenVaule Decomposition. #### Resolution Now, Let's look at the question in the beginning of this section \[\begin{align} AAA\cdots A&= \begin{pmatrix}3& 1 \\0 & 2\end{pmatrix}\begin{pmatrix}3& 1 \\0 & 2\end{pmatrix}\begin{pmatrix}3& 1 \\0 & 2\end{pmatrix}\cdots \begin{pmatrix}3& 1 \\0 & 2\end{pmatrix}=\prod_{i=1}^{1000}\begin{pmatrix}3& 1 \\0 & 2\end{pmatrix}\\ AAA\cdots A &= Q\Lambda Q^{-1}Q\Lambda Q^{-1}Q\Lambda Q^{-1}\cdots Q\Lambda Q^{-1}=Q\prod_{i=1}^{1000}\begin{pmatrix}3& 1 \\0 & 2\end{pmatrix}Q^{-1}\\ AAA\cdots A &=Q\Lambda\Lambda\cdots \Lambda Q^{-1}= Q\begin{pmatrix}2^{1000} & 0 \\0 & 3^{1000}\end{pmatrix}Q^{-1} \end{align}\] The calculation is extremely simple using EVD.

Exercise

- Research how to compute EigenVectors and EigenValues, then compute\(\begin{pmatrix}1 & 2 & 3\\4 & 5 &6\\7 & 8 & 9\end{pmatrix}\).

- Think about the decisive factor affects how many EigenValues we can get.

Singular Value Decompositon

Notice that EigenVector Decomposition is applied to decompose square matrices. Is there any approach to decompose non-square matrices? The answer is a YES, and the name is Singular Value Decompositon.

Intuition

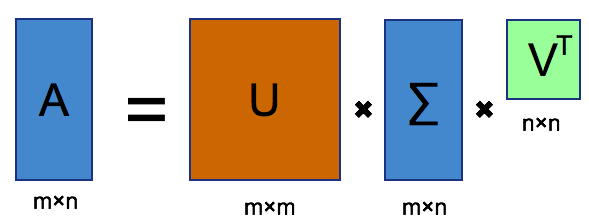

First of all, let's take a look at what SVD looks like  From the picture, we can find that matrice \(A\) is decomposed to 3 components: \(U\), \(\Sigma\) and \(V^{T}\). \(U\) and\(V^T\) are both sqaure matrices and \(\Sigma\) has the same size as \(A\). Still, I want to emphasize that \(U\) and\(V^T\) are both unitary matrix, which means

the Determinant of \(U\) and \(V^T\) is 1 and \(U^T=U^{-1}\quad V^T=V^{-1}\).

From the picture, we can find that matrice \(A\) is decomposed to 3 components: \(U\), \(\Sigma\) and \(V^{T}\). \(U\) and\(V^T\) are both sqaure matrices and \(\Sigma\) has the same size as \(A\). Still, I want to emphasize that \(U\) and\(V^T\) are both unitary matrix, which means

the Determinant of \(U\) and \(V^T\) is 1 and \(U^T=U^{-1}\quad V^T=V^{-1}\).

Deduction

In the Linear Transformation section, we can transform a vector to

another coordinate axes. Assume you have a non-square matrice, and you

want to transform A from vectors \(V=(\vec{v_1},

\vec{v_2},\cdots,\vec{v_n})^T\) to antoher coordinate axes which

is \(U=(\vec{u_1},

\vec{u_2},\cdots,\vec{u_n})^T\), the thing is, \(\vec{v_i}\) and \(\vec{u_i}\) have unit length, and all

directions are perpendicular, that is, each of \(\vec{v_i}\) are at right angles to other

\(\vec{v_j}\), we name such matrices as

orthogonal matrices. In addition, I need add a factor \(\Sigma=(\sigma_1,\sigma_2,

\sigma_3,\cdots,\sigma_n)\) which represent the times of each

direction of \(\vec{u_i}\), i.e., We

need transform A from \(V=(\vec{v_1},

\vec{v_2},\cdots,\vec{v_n})^T\) to \((\sigma_1 \vec{u_1},\sigma_2 \vec{u_2}, \sigma_3

\vec{u_3},...\sigma_n \vec{u_n})^T\). From the picture below we

can find that we want to transform from the circle coordinate axes to

the ellipse axes.  \[\begin{align}

\vec{v_1} \vec{v_2} \vec{v_3},...\vec{v_n} \qquad\rightarrow \qquad

&\vec{u_1},\vec{u_2},\vec{u_3},...\vec{u_n}\\

&\sigma_1,\sigma_2, \sigma_3,...\sigma_n

\end{align}\]

\[\begin{align}

\vec{v_1} \vec{v_2} \vec{v_3},...\vec{v_n} \qquad\rightarrow \qquad

&\vec{u_1},\vec{u_2},\vec{u_3},...\vec{u_n}\\

&\sigma_1,\sigma_2, \sigma_3,...\sigma_n

\end{align}\]

Recall that we can transform \(A\) at every direction, then generate another direction as new coordinate direction. So we have \[ A \vec{v_1}=\sigma_1 \vec{u_1}\\ A \vec{v_2}=\sigma_2 \vec{u_2}\\ \vdots\\ A \vec{v_j}=\sigma_j \vec{u_j}\]

\[\begin{align} &\begin{pmatrix}\\A\\\end{pmatrix}\begin{pmatrix}\\ \vec{v_1},\vec{v_2},\cdots,\vec{v_n}\\\end{pmatrix}=\begin{pmatrix}\\ \vec{u_1}, \vec{u_2},\cdots,\vec{u_n}\\ \end{pmatrix}\begin{pmatrix} \sigma_1 & 0 & \cdots & 0 \\ 0 & \sigma_2 & \cdots & 0 \\ \vdots & \vdots & \ddots & \vdots \\ 0 & 0 & \cdots & \sigma_n \end{pmatrix}\\ &C^{m\times n}\qquad\quad C^{n\times n}\qquad\qquad\qquad C^{m\times n}\qquad \qquad \qquad C^{n\times n} \end{align}\] Which is \[A_{m\times n}V_{n\times n} = \hat{U}_{m\times n}\hat{\Sigma}_{n\times n}\]

\[\begin{align} A_{m\times n}V_{n\times n} &= \hat{U}_{m\times n}\hat{\Sigma}_{n\times n}\\ (A_{m\times n}V_{n\times n}V_{n\times n}^{-1} &= \hat{U}_{m\times n}\hat{\Sigma}_{n\times n}V_{n\times n}^{-1}\\ A_{m\times n}&=\hat{U}_{m\times n}\hat{\Sigma}_{n\times n}V_{n\times n}^{-1}\\&=\hat{U}_{m\times n}\hat{\Sigma}_{n\times n}V_{n\times n}^{T} \end{align}\]

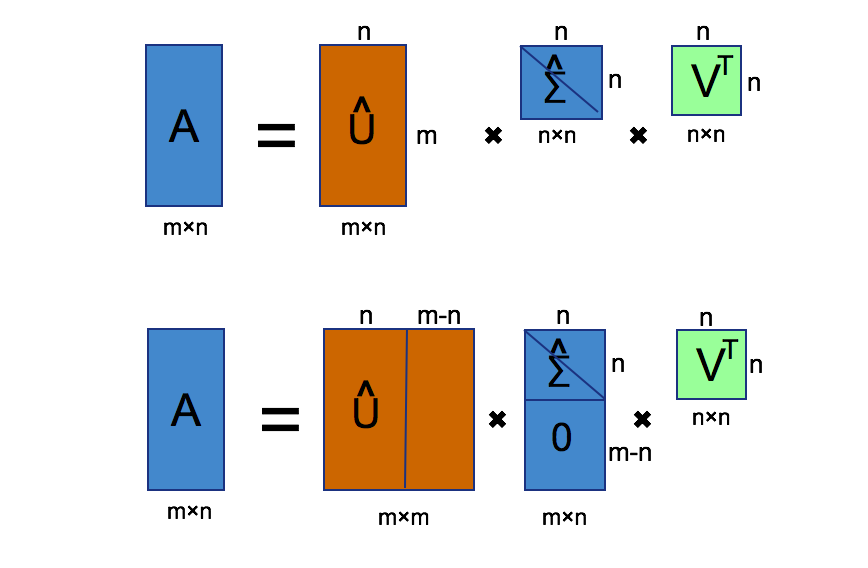

We need do something to the equation in order to continue the

deduction. First we stretch matrice \(\hat{\Sigma}\) vertically to \(m \times n\) size. Then stretch \(\hat{U}\) horizonly to \(m\times m\), we can set any value to the

right entries.

Due to the fact we need calculate \(U^{-1}\) and \(V^{-1}\), the equation is adjusted to \[A_{m\times n} = U_{m\times m}\Sigma_{m\times n}V^T_{n\times n}\] For furture convenience, we need sort all \(\sigma s\), which means: \[\sigma_1\geq\sigma_2\geq\sigma_3 \geq\cdots\geq \sigma_m\]. #### How to calculate \(U\), \(V^T\) and \(\Sigma\) To Decompose matrice \(A\), we need calculate \(U\), \(V^T\) and \(\Sigma\). Remember that \(U^T = U^{-1}\) and \(V^T = V^{-1}\), we will use the property next.

\[\begin{align} A &= U\Sigma V^T\\ \end{align}\]

\[\begin{align} AA^T&=U\Sigma V^T(U\Sigma V^T)^T\\ &=U\Sigma V^TV\Sigma^T U^T\\ &=U\Sigma V^{-1}V\Sigma^T U^T\\ &=U\Sigma I\Sigma^T U^T\\ &=U\Sigma^2 U^T \end{align}\]

\[\begin{align} (AA^T)U&=(U\Sigma^2 U^T)U\\ &=(U\Sigma^2 )U^{-1}U\\ &=U\Sigma^2 \end{align}\]

\[\begin{align} A^TA &=(U\Sigma V^T)^TU\Sigma V^T\\ &=V\Sigma^T U^TU\Sigma V^T\\ &=V\Sigma^T U^TU\Sigma V^T\\ &=V\Sigma^T U^{-1}U\Sigma V^T\\ &=V\Sigma^T I\Sigma V^T\\ &=V\Sigma^2 V^T\\ \end{align}\]

\[\begin{align}(A^TA)V&=(V\Sigma^2 V^T)V\\ &=(V\Sigma^2)V^{-1}V\\ &=V\Sigma^2 \end{align}\]

Image Compression

Firstly, let's look at the process of compressing a picture, the left

picture is original grayscale image. On the right, under different

compress rate, we can see pictures after reproducing. Before compress,

the size of the picture is 1775K byte. Then the picture is almost the

same, when we compress which into 100K byte size, which means we can

save 90% storage space

To compress a piture, you just decompose the matrice through SVD,

then instead of using the original \(U_{m\times m}\), \(\Sigma_{m\times n}\) and \(U_{n\times n}\), we shrink every matrice to

new size \(U_{m\times r}\), \(\Sigma_{r\times r}\) and \(U_{r\times n}\). The final \(size(R)\) is still \(m\times n\), but we abandon some entries

since these entries are not so important than these we have reserved.

1 | %% octave: core code of svd compressions |

Summary

Today we have learned mathmatics backgroud on SVD, including linear transformation and EigenVector&EigenVaule. Before SVD, we first talked about EigenValue Decomposition. Finally, Singular Vaule Decomposition is very easy to be deduced. In the last section, we took an example see how SVD be applied to image compression field.

Now, it comes to the topic how to save our storage of a 32G iPhone7, the coclusion is obvious: using SVD compress image to shrink the size of our photos.

Reference

- https://www.youtube.com/watch?v=EokL7E6o1AE

- https://www.youtube.com/watch?v=cOUTpqlX-Xs

- https://www.youtube.com/channel/UCYO_jab_esuFRV4b17AJtAw

- https://yhatt.github.io/marp/

- https://itunes.apple.com/cn/itunes-u/linear-algebra/id354869137

- http://www.ams.org/samplings/feature-column/fcarc-svd

- https://www.cnblogs.com/LeftNotEasy/archive/2011/01/19/svd-and-applications.html